Converged networks with Fibre Channel over Ethernet and Data Center Bridging


and CEE equivalent terms. We use the term DCB to refer to an Ethernet infrastructure that implements at least the minimum set of DCB standards to carry FCoE protocols. A traffic class (TC) is a traffic management element. DCB enhances low-level Ethernet protocols to send different traffic classes to their appropriate destinations. It also supports lossless behavior for selected TCs, for example, those that carry block storage data. FCoE with DCB tries to mimic the lightweight nature of native FC protocols. It does not incorporate TCP or even IP protocols. This means that FCoE is a non-routable protocol meant for local deployment within a data center. The main advantage of FCoE is that switch vendors can easily implement the logic for converting FCoE/DCB to native FC in high-performance switch silicon. FCoE solutions should cost less as they become widely used.
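Because FCoE carries no TCP or IP header, a device can identify an FCoE frame from the Ethernet header alone. The following Python sketch illustrates the idea under stated assumptions: it reads the EtherType (0x8906 is the value registered for FCoE) and, if an IEEE 802.1Q tag is present, the 3-bit priority that DCB uses to separate traffic. The function name `classify_frame` and the sample frame are hypothetical, and the priority value 3 in the usage lines is only a commonly chosen value for storage traffic, not something this document specifies.

```python
import struct

ETHERTYPE_VLAN = 0x8100  # IEEE 802.1Q VLAN tag
ETHERTYPE_FCOE = 0x8906  # Fibre Channel over Ethernet
ETHERTYPE_IPV4 = 0x0800  # shown for contrast; FCoE does not use IP


def classify_frame(frame: bytes) -> dict:
    """Return the EtherType, the 802.1Q priority (None if untagged),
    and whether the frame carries FCoE. Illustrative helper only."""
    if len(frame) < 14:
        raise ValueError("frame is shorter than an Ethernet header")

    # Bytes 0-5: destination MAC, bytes 6-11: source MAC, bytes 12-13: EtherType.
    (ethertype,) = struct.unpack_from("!H", frame, 12)
    priority = None

    if ethertype == ETHERTYPE_VLAN:
        if len(frame) < 18:
            raise ValueError("frame is shorter than a tagged Ethernet header")
        # The tag control field holds the priority in its top 3 bits;
        # the real EtherType follows the tag.
        tci, ethertype = struct.unpack_from("!HH", frame, 14)
        priority = tci >> 13

    return {
        "ethertype": ethertype,
        "priority": priority,
        "is_fcoe": ethertype == ETHERTYPE_FCOE,
    }


# Usage: a tagged frame with priority 3 on VLAN 100 carrying FCoE.
dst = bytes.fromhex("0efc00010203")
src = bytes.fromhex("001122334455")
tag = struct.pack("!HH", ETHERTYPE_VLAN, (3 << 13) | 100)
sample = dst + src + tag + struct.pack("!H", ETHERTYPE_FCOE) + b"\x00" * 32
info = classify_frame(sample)
```

Note that the classification never looks past the Ethernet header, which is consistent with FCoE being a non-routable, data-center-local protocol.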

10 Gigabit Ethernet

One obstacle to using Ethernet for converged networks has been its limited bandwidth. As 10 Gigabit Ethernet (10 GbE) technology becomes more widely used, 10 GbE network components will fulfill the combined data and storage communication needs of many applications. With 10 GbE, converged Ethernet switching fabrics handle multiple TCs for many data center applications. DCB-capable Ethernet gives you maximum flexibility in selecting network management tools. As Ethernet bandwidth increases, fewer physical links can carry more data (Figure 2).

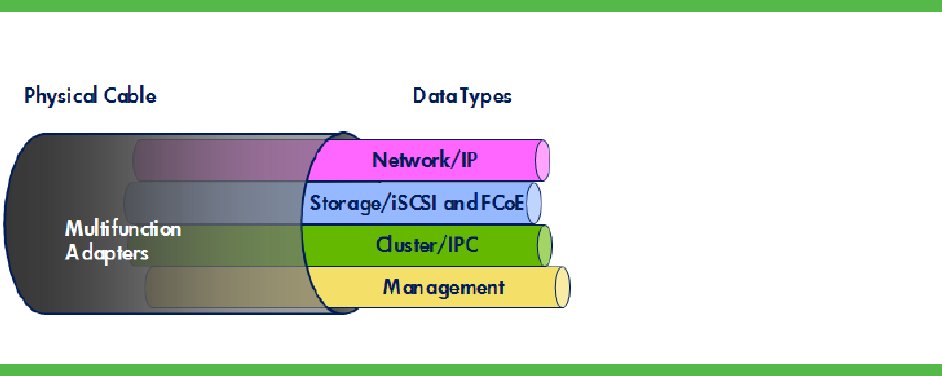

Figure 2. Multiple traffic types sharing the same link

HP Virtual Connect Flex-10

Virtual Connect (VC) Flex-10 technology lets you partition the Ethernet bandwidth of each 10 Gb Ethernet port into up to four FlexNICs. The FlexNICs function and appear to the system as discrete physical NICs, each with its own PCI function and driver instance. You must partition the bandwidth in increments of 100 Mb. While FlexNICs share the same physical port, traffic flow for each is isolated with its own MAC address and VLAN tags between the FlexNIC and associated VC Flex-10 module. Using the VC Manager CLI or GUI, you can set and control the transmit bandwidth available to each FlexNIC according to server workload needs. With the VC Flex-10 modules now available, each dual-port Flex-10-enabled server or mezzanine card supports up to eight FlexNICs, four on each physical port. Each VC Flex-10 module can support up to 64 FlexNICs.
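The partitioning rules above (up to four FlexNICs per 10 Gb port, bandwidth set in 100 Mb increments) can be checked with a few lines of arithmetic. The Python sketch below is an illustration only and is not the VC Manager interface: the function name `validate_flexnic_allocation` is hypothetical, and treating 100 Mb as the smallest allowed allocation is an assumption drawn from the increment size.

```python
PORT_CAPACITY_MB = 10_000    # one 10 Gb Ethernet port
INCREMENT_MB = 100           # partitioning granularity stated in the text
MAX_FLEXNICS_PER_PORT = 4    # up to four FlexNICs per physical port


def validate_flexnic_allocation(allocations_mb):
    """Check a proposed per-FlexNIC bandwidth split for one port.

    Returns the unallocated bandwidth in Mb, or raises ValueError
    if the split breaks one of the rules. Hypothetical helper.
    """
    if not 1 <= len(allocations_mb) <= MAX_FLEXNICS_PER_PORT:
        raise ValueError("a port supports one to four FlexNICs")

    for bw in allocations_mb:
        # Assumption: each FlexNIC gets at least one 100 Mb increment.
        if bw < INCREMENT_MB or bw % INCREMENT_MB != 0:
            raise ValueError(f"{bw} Mb is not a positive multiple of 100 Mb")

    total = sum(allocations_mb)
    if total > PORT_CAPACITY_MB:
        raise ValueError(f"{total} Mb exceeds the 10 Gb port capacity")

    return PORT_CAPACITY_MB - total


# Usage: four FlexNICs that together use the whole port.
remaining = validate_flexnic_allocation([4000, 2500, 2500, 1000])
```

A split such as `[1000, 500]` leaves 8,500 Mb unallocated, while `[150]` or five entries would be rejected.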

Flex-10 adds LAN convergence to VC’s virtual I/O technology. It aggregates up to four separate traffic streams into a single 10 Gb pipe connecting to VC modules. VC then forwards the frames to the appropriate external networks. This lets you consolidate and better manage physical connections, optimize bandwidth, and reduce cost.